Transcript of Episode 55: Mo Jain

Disclaimer: Transcripts may contain some errors.

Grant Belgard: [00:00:00] Welcome to The Bioinformatics CRO Podcast. I’m Grant Belgard and joining me today is Mo Jain of Sapient. Welcome.

Mo Jain: [00:00:07] Thank you so much, Grant. Pleasure to be here today.

Grant Belgard: [00:00:10] So tell us about Sapient.

Mo Jain: [00:00:12] Absolutely, Grant. So Sapient is a discovery CRO organization which is really focused on biomarker discovery. And the way we operate is through leveraging novel technologies, particularly in the mass spectrometry sector in order to enhance human discovery. And we primarily serve as a partner for large pharma, early biotech, and even some foundations to help them in their biomarker discovery efforts as part of their drug discovery work.

Grant Belgard: [00:00:39] Can you tell us about the history of the company?

Mo Jain: [00:00:41] Yeah, absolutely. The concept of Sapient admittedly dates back almost two decades now. I’m trained as a physician, and one of the common questions that one receives when treating patients is, why did I get this disease? How do I know next time if this is going to happen to me? How can I protect my loved ones and family members? What are the diagnostic tests that I can use to know if I’m going to respond to this drug? And one of the really humbling aspects of medicine is, despite the massive amount of knowledge that’s been gained over the last several hundred years, really what we still understand and know represents a very, very small fraction of all the knowledge there is to know. And for most of these very insightful questions, the answer typically is I really don’t know the answer. And at this time, when I was in the midst of training, the human genome was really coming to fruition in the early 2000s, when the initial draft of the human genome was reported, and genomics was going to revolutionize the world as we know it. And the basic idea behind this was that by understanding the basic blueprints of human life, we could leverage that information to understand how healthy or not healthy you may be over the course of your existence, what diseases you were going to develop or be predisposed to, what drugs you were going to respond to, and essentially we would be able to transform the way we think about diagnosing and treating human disease.

Mo Jain: [00:02:06] The challenge has to do with the fact that the amount of information and the type of information that’s encoded in the genome doesn’t actually enable that to happen in most cases. And at the time when I was in the midst of training, and I apologize for the long answer, you’ll see where I’m going in a moment, we were doing a thought exercise. And that is, well, if we could parallelize sequencing to the nth degree, and if we essentially could line up every single human on the planet, and we knew everything about everyone and we sequenced everyone’s genome, how much of human disease could we explain? And the hope would be 80, 90, 95, 98%. In actuality, when you look at the numbers, and there’s many ways you can calculate this, as a heritability index, population attributable risk index, etcetera, the true answer is probably somewhere in the 10 to 15% range. And that’s a theoretical upper limit. If you really look in actuality, the number is probably in the single digits for how much of human disease we can truly explain through sequence. And perhaps that’s not surprising. Your genome is set from the moment of conception, and we know that the way you live your life, everything you eat, drink, smell, smoke, where you live, how you live, is massively important in how healthy or not healthy you’re going to be over the course of your existence.

[00:03:25] And none of that information is captured in your underlying genome. And so I became very interested in that 85% of population attributable risk that’s not encoded in genetic sequence, understanding once again, diet, lifestyle, environmental factors, how one organ system may communicate with another organ system, how the microbiome that’s part of our gut and skin and saliva influences human disease. Again, none of that information is encoded in genetic sequence. But it turns out that that’s encoded in small molecule chemistry. So when you ate something for breakfast or lunch or dinner, depending upon where in the world you are and what time it is, that gets broken down in your gut into small molecules. Those small molecules enter into your bloodstream. And because we all eat only healthy things, they do good things in our organ systems and allow us to be healthy over time. And the basic premise is that, well, if we could capture that information, if we could take human blood and probe the thousands of markers that are floating around in human blood, we can begin to understand how humans interact with their environment both internally as well as externally, and leverage that information now in the way the genome was supposed to in understanding and predicting who’s going to develop what diseases over time, how long someone may live, whether or not I’m going to respond to a particular drug A versus drug B, et cetera.

[00:03:25] So that was the basic premise. Now this is not a new idea. Every year you go to the doctor. They draw two tubes of blood, typically about 20ml of blood. And in that, we measure somewhere in the order of 12 things to 20 things depending upon the test you get. Half of those are small molecule biomarkers, creatinine and cholesterol levels, glucose etcetera. The challenge is that there’s tens of thousands of things floating around in your blood, and we’re literally capturing less than 0.1% of them. And so how do we develop technologies that allow us to very rapidly measure these thousands of things in blood, and to do this at scale across tens of thousands of people in a manner that allows us to discover, well, what are the next 12 most important tests? What are the next 12 after that? And how do we leverage this information at scale to really predict and understand the human condition at its earliest disease points? And so that was the basic premise of Sapient. It was born out of academia, where we spent the last decade prototyping and developing these bioanalytical technologies. And as these were coming to fruition, we spun them out to form Sapient and that’s how we came to be today.

Grant Belgard: [00:05:47] The work you’ve done at Sapient, have you seen a large number of complex, non-linear, non-additive interactions among factors, or are you finding the major signals are things that can be reduced to more simple and straightforward guidelines looking at LDL and HDL, new markers along those lines?

Mo Jain: [00:06:11] Yeah. The simple answer is both, which I recognize is not all that helpful. But it comes down to what type of predictive analytic you require, what’s the threshold that you require for actual diagnosis. Now oftentimes, for virtually all cases, you can reduce down information to a single marker or at least what I would say is a practical number of markers, somewhere below half a dozen, that we can measure under the most stringent of laboratory methods clinically in hospitals around the world, and that provides us the information we need to know. That works well. You can imagine in the same way, cholesterol is highly predictive of those individuals who are at risk for heart disease. Developing simple tests like that for cancer, Alzheimer’s disease, liver disease, lung disease, GI illnesses, pregnancy related complications, etcetera, etcetera is oftentimes quite functional. And that’s where we spend most of our time at Sapient. At the same time though, as you’re suggesting Grant, much of human disease is non-linear in its etiology. It’s rarely a single case or an additive case of two events that cause disease, but rather it’s a much more complex interaction of many, many different [inciting] etiologies. It may be a genetic predisposition, which increases risk somewhere in the order of several percentile, added on with an environmental exposure, together with a particular initial acute insult, that collectively results in a disease process cascading and starting. And so this is where we’ve become much more interested in taking these very complex data sources, where we’re measuring tens of thousands of things in human blood, and using much more advanced AI-based statistical modeling now to be able to much more holistically predict and understand these complex interactions.

Grant Belgard: [00:07:48] How much added power do you see from that?

Mo Jain: [00:07:50] Yeah, quite a bit, which is both an incredible opportunity and is incredibly challenging. As you can imagine, in the same way if you look at a picture, a painting, oftentimes with a very small reductionist view of that painting where you’re looking at only several pixels, you can oftentimes tell something about the painting. This is a blue painting. It’s of the ocean or something to that extent, but with a very, very small snapshot. But as you took a much more global view of the underlying image, that’s where the real granularity begins to emerge. And as we go from simply saying, well, your risk of disease X is Y percentile or it’s increased in this manner to a much, much more holistic view of across these 100 different diseases, this is your sort of combinatorial risk. This is how you want to optimize life and diet. This is how you want to optimize medications specifically for you. This is how we want to develop new drugs. This is where allowing for that complexity is absolutely critical.

Grant Belgard: [00:08:52] Would you say this approach is more powerful for risk of onset of a disease that hasn’t yet occurred or for prognosis?

Mo Jain: [00:09:04] Yeah, it very much depends upon the disease and the biological question. And you can break these down into diagnosis, meaning early diagnosis prior to disease onset or at the earliest stages; prognosis, meaning once disease has become clinically apparent, understanding long term outcomes; and then prediction regarding response to therapeutics, which is really the third component of this. And as you can imagine, the added value of more complex modeling versus reductionist testing of single molecules partially depends upon which of those three question baskets you’re in, and then also the specific disease and the complexity and heterogeneity of the underlying disease state. Now the good and bad is we do a relatively poor job of this today. And when you think about a complex disease, whether it be heart disease or diabetes, this probably represents half a dozen or more diseases, all of which have a common end phenotypic variable of metabolic insufficiency or hardening of your coronary arteries, that we’re lumping all together, even though they have very different mechanisms of action that allowed someone to go from a normal stage to an abnormal stage. So even for these very heterogeneous, complex multi-organ systemic diseases, even being able to break it down into those broad categories, what are the four types of subgroups, what are the five types of subgroups, can be extremely valuable. But now being able to take that even further and using more complex modeling, these AI-based nonlinear approaches where you’re able to say, well, I’m not interested in the five subgroups, I’m interested in the 100 subgroups and understanding which one of these specific subgroups is going to be optimal for a particular therapeutic. This is where adding complexity and nuance becomes critical.

Grant Belgard: [00:10:43] What does the path to clinic look like for what you do?

Mo Jain: [00:10:45] So this is where it becomes really, really important. And this is absolutely an evolving area that’s changing literally week over week. It used to very much be five years ago that clinical translation had to be dependent upon a single test, a single molecule that was well measured that we could enter into what we call a CLIA-accredited laboratory. And that was a one test, one diagnosis. That modality and that way of operating has completely changed over the last half decade. And we’ve seen this now. There’s something on the order of over 100 different tests that are at the FDA that use much more complex ML-based algorithms or AI-based algorithms for diagnostic purposes. We’ve already seen this in early pathology and histopathology and in radiology, and I wholly expect that the inflection is only starting now. So I suspect that over the near future here, I’m literally talking about the next several years, much more complex, nuanced, blood-based testing is going to become the norm. We already see this in a number of conditions, whether it be diagnostic tests, whether it be Cologuard, for instance for colon cancer, whether it be genetic testing for particular chemotherapeutics in the setting of cancer. We’re already seeing this evolution happening in real time, and I suspect that’s going to not spill over, but extend to virtually every single human disease.

Grant Belgard: [00:12:07] How much of the work you do is brought to you by clients or sponsored by partners versus internal R&D to develop these tests?

Mo Jain: [00:12:17] Yeah, it’s a great question Grant. We’re somewhat multi-personality if you will, let’s say, in that we’re a front-facing CRO. So a good portion of what we do, over 80% of our time and attention, is really based upon servicing large pharma and early biotech in their drug development efforts. Simply put, we’re engaging these sponsors. They’re bringing biological samples to us. We’re analyzing them on our proprietary mass spec technologies, generating that data, doing the statistical analysis, making the discovery, and returning that discovery to them for commercialization as part of their drug development efforts. The other 20%, as you suggested, is really based upon our internal R&D efforts. And so at the same time, because we have such ultra high throughput mass spectrometry systems that are capable of generating data faster than any other technology worldwide, we’ve also been able to go around the world and collect hundreds of thousands of biological specimens internally as part of our R&D efforts, analyze those samples, generate now what is the world’s largest human chemical database, and amalgamate that information in a centralized repository internally here at Sapient that we now are subsequently mining for novel diagnostic purposes.

Grant Belgard: [00:13:28] What are the biggest challenges you face doing that?

Mo Jain: [00:13:30] Yeah, it’s a really good question. Up until several years ago, this would have been a simple technology issue. How do we actually generate the data? And I’m very glad to say that the efforts of Sapient have enabled us to generate now and handle data very, very quickly, meaning handling 100,000 to several million biological specimens for mass spectrometry analysis now is no longer a dream effort, but is very practical. It’s a daily ongoing here. So you can imagine that bucket now has been, or that can has been kicked down a little bit of the road, where it’s no longer a data generation issue. It comes down to a data understanding and interpretation issue, whereby how do we take this complex data now and really commercialize it in a way that’s for the betterment of society? How do we develop the diagnostic tests that are going to be most meaningful? In many ways Grant, it becomes the kid in the candy store problem. If you have massive amounts of data, you can theoretically answer hundreds of questions simultaneously. And so what are the most high yield, high impact questions for different populations that we want to answer first and bring to clinical testing as quickly as possible? And that’s very much a personal sort of question.

[00:14:42] Obviously there’s a business use case behind it. But you can imagine, if you ask a particular foundation that operates in the rare disease space, they may have a particular preference. If you look across prevalence of disease across large populations of adults in the developed world, it’s a very different answer: it may be heart disease and cancer, it may be basic aging. And if you ask foundations that are operating in low to medium income countries, whereby there’s arguably the greatest need for human health and development, it’s obviously a very different set of questions around early childhood development, pregnancy nutrition and optimisation of in-field testing. So that’s one of the largest challenges that we face on a day to day basis. Now, certainly that’s a good problem to have. It’s very much a “first world problem” as to where you want to go first, and how you want to operationalize and commercialize very large data. But it’s a very real problem that I think many organizations that operate in this space are facing every day.

Grant Belgard: [00:15:40] Is there a system you use to make those decisions?

Mo Jain: [00:15:44] Yes, there is. And like the best of systems, you can imagine Grant that it oftentimes goes out of the window within the first three sentences of a discussion. So there’s certainly a lot of business use cases that we think through, understanding what the addressable markets are, what reimbursement looks like, these things that point us in particular directions. But at the same time, we’re fortunate enough, just given how we operate, to have a little bit of leeway in the other questions that may be of equal if not greater importance, but may be of slightly less commercial value. And thinking through some programs that we have in understanding maternal nutrition in the developing world, programs that can have massive impact in large numbers of people, that can move needles but may not have the same commercial relevance as coming up with a diagnostic test that tells us if we’re going to develop cancer in the next several years. Equally important, just a slightly different commercial market.

Grant Belgard: [00:16:37] How do you think about causality or do you think about causality? Are you just really focused on strong associations? What’s the most predictive regardless of causal relationships?

Mo Jain: [00:16:46] It’s a fantastic question, and I’m happy to provide an answer. But ask me tomorrow and I’m sure I’ll give you a different answer. And as you can imagine, this is one of those things that fluctuates quite a bit. In the end, it depends upon what you’re using that information for. So let me give you an example, HDL is an extremely strong predictor, stronger than any other predictor for heart disease over time. But it’s still very questionable whether HDL itself is causal for coronary disease. We call it the good cholesterol, but likely what the evidence really points to is that HDL is reading out some other phenomenon that’s actually the causal agent. Now if I want to understand what my heart disease risk is over time, I just need a valid correlation that we know is specific and is statistically rigorous over time. And so HDL serves that purpose for me. Now, if I want to develop a drug and I want to use as a marker of drug efficacy HDL, well then having a causal association becomes much, much more important. And we spend quite a bit of time thinking through this process, because our goal is not only to come up with diagnostic markers, but to develop new drug targets and to validate those targets to develop new nutraceutical and natural product based therapeutics et cetera, et cetera.

[00:18:01] There’s a lot we can do with this type of data. And part of this has to do with understanding that causal question. And this is where we do quite a bit of multidimensional data integration, particularly with genomics information together with these small molecule biomarkers. In essence, doing a Mendelian randomization type of approach from which we may be able to infer causal relationships. And as you’re well aware, having worked for many years in this space, MR-based analysis, particularly bidirectional MR, is very useful when it works, but the absence of information doesn’t necessarily negate causality. And so this is the way we certainly think about it. Again, it all has to do with how you want to use that data and what’s the objective function. In the end, for a diagnostic test, it just matters if it accurately diagnoses and predicts people who are at risk of a disease state.
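For readers unfamiliar with Mendelian randomization, the idea mentioned above can be sketched on simulated data. This is a minimal illustration only, not Sapient's method: the function names (`simulate`, `naive_slope`, `wald_ratio`), the effect sizes, and the allele frequency are all assumptions chosen for the example. A genetic variant that affects a biomarker, but is independent of the confounder, is used as an instrument, and the causal effect is estimated with the standard Wald ratio (variant-outcome covariance divided by variant-biomarker covariance).

```python
import random


def cov(a, b):
    """Sample covariance of two equal-length lists."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (len(a) - 1)


def simulate(n, true_effect, seed=0):
    """Simulate genotype g, biomarker x, and outcome y (all values hypothetical).

    An unmeasured confounder u drives both x and y, so a plain regression
    of y on x overstates the causal effect of x.
    """
    rng = random.Random(seed)
    g, x, y = [], [], []
    for _ in range(n):
        # Genotype: count of effect alleles (0, 1, or 2), allele frequency 0.3.
        gi = int(rng.random() < 0.3) + int(rng.random() < 0.3)
        u = rng.gauss(0, 1)                         # unmeasured confounder
        xi = 0.5 * gi + u + rng.gauss(0, 1)         # biomarker level
        yi = true_effect * xi + u + rng.gauss(0, 1)  # outcome
        g.append(gi)
        x.append(xi)
        y.append(yi)
    return g, x, y


def naive_slope(x, y):
    """Observational slope of y on x; biased when confounding is present."""
    return cov(x, y) / cov(x, x)


def wald_ratio(g, x, y):
    """Wald ratio (instrumental variable) estimate of the effect of x on y."""
    return cov(g, y) / cov(g, x)


if __name__ == "__main__":
    g, x, y = simulate(50_000, true_effect=0.3, seed=1)
    print("naive slope:", round(naive_slope(x, y), 3))
    print("Wald ratio :", round(wald_ratio(g, x, y), 3))
```

In this simulation the naive slope is inflated by the confounder, while the Wald ratio lands near the simulated effect of 0.3. The sketch assumes a valid instrument; with a weak or pleiotropic variant the estimate can be uninformative, which fits the caveat above that a null result does not rule out causality.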

Grant Belgard: [00:18:51] How important is longitudinal data for what you do?

Mo Jain: [00:18:54] Very, very important. And I think one of the lessons that we learned from the genomic revolution, well, there’s several things that we learned. One, the genome, as I suggested earlier on, really imparts a minority risk of human disease. Who our parents are, what occurs at that moment of conception when a human is formed, that really provides only a very small amount of predictive capacity for what’s going to happen to us over the next 100 years of our existence. That’s the first lesson. The other lesson that we’ve learned is that human disease is a very dynamic process. Health is ever fluctuating on different time scales, certainly on a decade-long time scale, but even on a day-to-day basis, on an hour-to-hour basis. When you really dive into the nuance, if I slept last night versus if I slept one hour last night, I probably have a different health state today. Now, the impact of that may not be relevant over many, many years, but certainly you could argue that I’m healthier because I slept or didn’t sleep, or if I ate correctly versus didn’t eat correctly. And anyone who’s ever gone out and had an interesting night and woke up with a hangover can agree with that. And so being able to understand that dynamic nature is critical. Now your genome for the most part, your somatic genome is fixed from the time of conception and doesn’t change over life. And this is where diving into dynamic markers, particularly these small molecule dynamic markers that read out communication channels between our internal organ systems, between the external world and our internal sort of physiology, between things like diet, lifestyle, microbiome, toxicants, etcetera, etcetera. This is where small molecule biomarkers are particularly important. And because of their dynamic nature, they have the ability to change quite quickly, which can read out almost in a real time fashion particular health and disease states.

Grant Belgard: [00:20:41] What challenges have you run into collecting longitudinal data and integrating that with clinical data? And I guess I’m going to ask a multi-pronged question here. Have you done this in health systems outside the US? And do you have any experience comparing and contrasting the data you get from different systems?

Mo Jain: [00:21:04] Yeah, as you can imagine, there’s a couple of different parts to the question, all of which are really important. I’m going to answer the final part first. I firmly believe that humans are all equal, but not identical. And one of the core components is where geographically in the world we live. And you know the famous quote that best summarizes this is that it’s not your genetic code, but it’s your zip code that’s a better predictor of disease. And statistically, that’s absolutely true. Based upon your geographic zip code, we typically have a better handle on your underlying long term risk of disease than anything else. And so certainly geography plays a huge role in this. It’s one of the core aspects of our interaction with the world. And you can imagine geography feeds into everything from the degree of sunlight, the type of water, the type of diet, toxicants that are local to that environment, socioeconomic state and access to health care. There are so many aspects that are fed into underlying geography. And so this is where our ability to broadly sample biological specimens as well as individuals from around the world has been critical. And so as you can imagine, there’s value in identification of universal diagnostic tests that work independently of where you are. If you’re in Sub-Saharan Africa, if you’re in sunny San Diego, if you’re in Western Europe, it doesn’t matter. The test reads out what it should read out.

[00:22:19] And there’s also secondarily value in having localized or population specific tests, not something that traditionally in medicine we’ve done. But if it’s the case that there’s particular exposures that are unique to a given environment, those are a key determinant of a given disease in a particular location. To not sample that information and use it is silly to me. And so we oftentimes are looking for both of these. What you can find is there’s certainly universal realities and universal markers that denote health and disease states over time or drug response. But there’s also geographically localized markers that may be unique in specific populations, owing once again to diet or whatnot that may be unique to that environment. And I think both of them have value in understanding the human condition. There’s certainly some practical issues and considerations. We’ve been very fortunate to have a number of relationships with top academic medical centers around the world. And the simple answer is that there’s more biological specimens and there’s more data available in the world than people are using. So it’s there if you’re willing to work within the legal constraints of accessing it and whatnot. The real challenge is one that I suspect you’re alluding to, that everyone who works in the large data analytics space has learned one way or the other over the last decade, and that is garbage in, garbage out. I don’t care how good your metrics are. If the data is fundamentally not clean, and if you’re not conditioning on high quality data, then you’re just leading yourself astray.

[00:23:53] Now, that doesn’t mean data has to be pristine. I’m actually quite a bit of a fan of using real world data because you want there to be noise. When you see signal emerging from that noise, you have much more confidence that that signal is real, as opposed to pristine data that may be present only in a phase three clinical trial. And then when you extend those same markers to the real world, you see that they have less of an effect than they should, simply because there’s now other confounding factors. But in the end, as much as I’m a fan of our technologies and the type of data we generate, having clean phenotype data is absolutely essential. And so we spend quite a bit of time internally here at Sapient, thinking through ways in which we can clean human data. We can QC that data, we can sanity check it, and ensure it’s of the highest quality, otherwise you’re just leading yourself astray. And certainly there’s very, very large data assets out there and data sets out there that are of less than stellar quality. And oftentimes those don’t result in any real meaningful discovery.

Grant Belgard: [00:24:52] Are there any go to external datasets that you’ll look to for validation of what you’re seeing at Sapient?

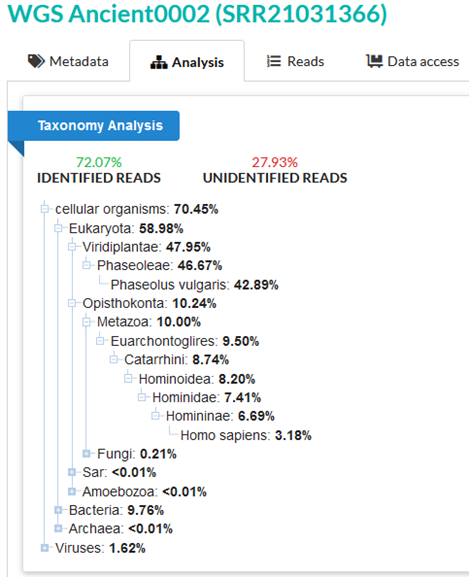

Mo Jain: [00:25:01] Yeah, it’s a really good question Grant. And this has been one of the challenges from an R&D perspective for us personally, in that when you think about the molecules that are floating around in your blood right now, there’s tens of thousands of these molecules floating around in you Grant. Somewhere in the order of less than 5% of these have ever been measured, analyzed, structurally elucidated, or understood in any meaningful way, which means more than 95% of what’s in your blood right now is a black box. And so this is where I have challenges sometimes using external sources for validation, because they’re very much couched within that 5%. This is the same as the lamppost effect, if you will, where everyone is looking under that same light shade or lamppost at the same several dozen to several hundred molecules, whether it be genetic factors or small molecule biomarkers or protein biomarkers, when the real signal lies outside of that initial light. And I’m a big fan of jumping into the dark, even if it’s sometimes a little bit challenging. And so what this ultimately ends up meaning is that we end up doing quite a bit of validation ourselves, simply because the current publicly available data assets, or even proprietary private data assets, are really not of a nature that allows us to adequately validate, or they’re simply not powered for true discovery in this space.

Grant Belgard: [00:26:22] What does the future hold for Sapient?

Mo Jain: [00:26:24] Yeah, it’s a great question. The simple answer is I frankly don’t know. There’s obviously many things that we’re hoping to do. I very much believe in our service orientation and really accelerating the drug development process and pipeline together with our sponsors, whether they be large pharma organizations, small and medium biotech, foundations or governmental organizations. There’s tremendous value in that work that we see. And if we can help bring drugs to fruition, well, we’ve had a good day. At the same time, as I mentioned earlier Grant, we’ve already generated the world’s largest human chemical databases, and they’re growing exponentially month over month. That provides some very, very unique opportunities that we have an obligation to bring to clinical translation, whether it be around new diagnostic tests, whether it be around better development and designing of clinical trials, whether it be around understanding and bringing means forward whereby we can predict who’s going to respond to particular therapeutics, whether it be developing natural pharmaceuticals, natural product pharmaceuticals themselves de novo. There’s a tremendous amount that we can do with these data assets. And that’s where I think Sapient is certainly going to continue to grow into the future. Now I hope as someone who’s aging hourly, today is actually my birthday Grant. So I’m aging more than [overlap]

Grant Belgard: [00:27:43] Happy birthday.

Mo Jain: [00:27:44] Well thank you, sir. Thank you. I just was alerted to that this morning. I forgot so I’m actually aging faster than I care to admit. But you know, I hope within the next several years to decade, diagnostic testing is completely different than where it is today. We’re no longer measuring two dozen molecules in human blood, but we’re measuring 20,000 molecules in human blood. And from that, being able to provide much, much more nuanced diagnostic information, prognostic information and therapeutic information regarding what’s the ideal way that I need to live my life, what’s the ideal diet, lifestyle and drug regimen that maximizes my personal health over time. And I’m hoping we’re moving to that position very quickly.

Grant Belgard: [00:28:27] So changing topics a bit, can you tell us about your own history, what in your background ultimately led to what you’re doing now? What prepared you for this? What maybe didn’t prepare you for this?

Mo Jain: [00:28:40] I wish I could say it was all very well planned out and deliberate, but you and I know that’s absolutely not the case. And so I trained as an MD-PhD. I was dual trained in medicine and science. My PhD degree is in molecular physiology. And I absolutely loved, loved, loved clinical medicine. It was a privilege to take care of patients. I very much enjoyed my patients. Those personal interactions and being able to help people in their own personal journey was something I was very passionate about as a cardiologist. I was also very frustrated by it, simply because much of clinical medicine is really about regressing to a common denominator or a common mean, whereby everyone with a given disease is treated with a given drug, even though we know that’s just not the way human medicine works, but it’s the best we can do. I was very frustrated by not being able to answer simple questions. When someone asks, well, why did I develop a heart attack at age 40? And what’s going to happen to my kids? And how do I test them for this? And there’s a lot of hemming and hawing that happens from the physician, simply because the real answer is, I have no idea. And there’s simply no testing we have for you. That, to me, is very unacceptable.

[00:29:45] As I mentioned, I was training at the dawn of the genomic revolution and I was very much excited by this idea that parallelized sequencing and genomics were going to transform the universe in a meaningful way, that this was all going to change. And at the same time, I was frustrated when I saw that that wasn’t going to actually happen. When we really got down to brass tacks and did the calculations, it didn’t make sense how this was going to work. And so I trained initially in clinical medicine. I spent quite a bit of time in Boston at the Broad Institute, at MIT, at Harvard Medical School, and at Brigham and Women’s. In my scientific pursuits, that’s where I started working in mass spectrometry and large data handling. I spent the better part of the last decade as a professor in the University of California system here in San Diego, where I was privileged to work with really, really bright students and postdocs and faculty members to develop some of these technologies. And now I’ve lost track. I don’t know if it’s the third or fourth career, but this next phase or next adventure whereby we spun out the technology and now I have the privilege of leading this organization and thinking through how we begin to commercialize these technologies and these types of data. So it’s a very wandering path. This is oftentimes the case. I’m excited by big questions. I’m excited by solutions that bring about real change. And I’m still charting that path, if you will.

Grant Belgard: [00:31:08] And what have been the biggest surprises to you personally on your founder’s journey?

Mo Jain: [00:31:14] Oh, boy. How much time do you have, Grant? I think there’s some universal surprises that I think anyone who goes through this process learns. You learn how hard it is. You learn that no matter how great your technology is, no matter how unique your data is, this is a people business in the end. And having the right people around the table is really the key to everything. Virtually all questions can be answered if you have the right people. You learn that it’s absolutely an emotional roller coaster. This is something that a number of founders had warned me about, but never really made sense to me, that this is something where you’re going to have days where you’re flying high, and then literally an hour later, you’re on the ground in the fetal position, and that high frequency fluctuation is maddening. This is a hard business, if you will. There’s a lot less risky pursuits in life than being a founder and being an entrepreneur. But in the end, there’s frankly nothing more rewarding in my mind. And oftentimes these two things go together. I’m not necessarily a risk taker by nature, but I feel this is what I was meant to do so it makes sense to me in some crazy way that I can’t quite explain.

Grant Belgard: [00:32:27] If you could go back to early 2021 around the founding of Sapient and give yourself some advice, maybe three bits of advice, what would they be?

Mo Jain: [00:32:37] Yeah. Wow. That’s tough. I hope it’s not don’t do this, but I was incredibly naive when we founded Sapient. And I think that’s a good thing. I think sometimes knowing too much prevents people from taking a leap, and leaps require faith, and they require oftentimes blinders. You can’t see the pool if you’re going to jump into it.

Grant Belgard: [00:32:57] You need a bit of irrational optimism, right?

Mo Jain: [00:32:59] That’s exactly right. Anyone who knows me knows I suffer from massive doses of optimism and so I’m not sure if I knew everything I know today. Certainly we would do things differently and whatnot, but I like the fact that I was naive. I think that was really an important aspect of our development, certainly of my own personal growth, but also for the company. Oftentimes coming into an enterprise with bias means you’re just going to do the same thing that the person before you did by the very nature of bias. And not having that experience forces you to question, from a very first principle basis, every problem and come up with oftentimes solutions that may not be traditional but are in many ways more efficient. I think I would warn myself, if you will, just to answer the question, about how difficult this is emotionally and psychologically, not something I appreciated. My job was to take care of people who are dying in the ICU. So I said, well, how hard can this be? And I was incredibly naive and ignorant to just how hard it is. But again, that’s not a bad thing. That’s a good thing. And lots of people had given me the advice of make sure you surround yourself with other founders, other CEOs, people going through this. You’ll need more “emotional support” than you’ve ever needed at any point in your life. And I’ve always liked to believe I was highly resilient and had strong emotional backbone, but that advice absolutely turned out to be true and in many ways has been the difference maker. And so I’ve certainly sought that out over the last year and have some incredible friends who are in this space who are in very, very different fields, who help me every day. I wish I had done that a little bit earlier. I think that would have saved a little bit of sanity and probably some gray hairs.

Grant Belgard: [00:34:43] I noticed just before recording that you’re a YPO member.

Mo Jain: [00:34:47] Yeah, that’s exactly right. I’m a true convert. And when I first heard about YPO, I said this sounds nuts. I don’t need another networking event. And it was honestly a very dear friend of mine who we were having dinner with in the early days of Sapient, who was a biotech entrepreneur himself and very successful, who looked over at me and said, hey, I’ve got something you need. And I said, oh man. He explained it to me and it sounded like a mix of a cult and a networking event, neither of which I have the time or energy for. And my wife certainly at the time was like, wow, do you really want to get involved in something else? But I would honestly say, and again I know I sound like a true believer, but it’s been one of the most important things I’ve done for myself personally over the 40 years of my existence. And so it’s been incredibly helpful to learn from people who are very talented and successful at different phases of their life in a very open and honest way. And so it’s had massive impact on me, not only professionally, but personally.

Grant Belgard: [00:35:43] Yeah, I just went to a Vistage event this morning, so I know exactly what you’re talking about. Fantastic. Maybe third piece of advice. I think we’re on three.

Mo Jain: [00:35:53] Yeah, a third piece of advice would be to ground yourself. And what I mean by that, it’s really, really important. As life becomes more chaotic and crazy and I never thought it could get any crazier than it was previously, but somehow we’ve been able to pack more in. It’s really important to understand what your North Star is. It’s important to have those people in your life that you’re present for, whether it be family and children, whether it be spouses and friends, parents, whatever the case may be. It’s really, really important selfishly to have those grounding mechanisms. And again, I always understood how important it was, but not something I appreciated how important it is professionally. I’m a better professional individual and I think I’m a better founder and a better CEO because I take the time now for those individuals in my life and I ground myself, and that’s really important for me personally.

Grant Belgard: [00:36:46] I think it’s fantastic advice. Thank you so much for your time. I really enjoyed speaking with you. I think it’s been a fun discussion.

Mo Jain: [00:36:54] Thank you so much, Grant. I really appreciate the invitation and I equally had a lot of fun today. So looking forward to this again at some point in the future.

Grant Belgard: [00:37:01] Thanks.